Almost everyone has social media these days, and people can freely watch videos on whatever platform they like. Videos have long been a part of our lives, whether for entertainment, news, or school. We watch them to laugh, to learn, and to keep up with the world. So I have to wonder: how can we continue to trust what we see when AI-generated videos are taking over?

In the past few months alone, the rise of AI video has been insane. At first glance, these videos seem completely normal. They look real and human, but they're not. They're synthetic, inauthentic, a little off, and sometimes downright scary.

The Danger of Hyper-Realistic AI Videos

Lately, more and more of these videos are surfacing online: hyper-realistic AI deepfakes, a form of AI-generated content designed to look lifelike. These videos can deceive anyone, tricking viewers into believing false narratives, especially when they are presented as news reports or celebrity statements. Some people can call out this fake content. However, older adults (60+) and people who aren't tech-savvy are hit hardest. Many of them rely on Facebook for their daily news, and they often lack the digital skills to tell fact from fiction. That is a dangerous situation, because they can unintentionally spread manipulated information or take real-world actions based on hyper-realistic AI videos.

I'll agree that AI videos can be used for good. Still, I believe they are used for more harm than good. If your face or voice is online, you are at risk. It doesn't matter how careful or innocent your content is; you could be next. With a single video, someone can use AI to recreate you as a new "character," like in the video game The Sims, and make you do and say anything. All without your knowledge or consent. How scary is that? Once these fakes are created, they can be used to spread misinformation, impersonate people in scams, or even destroy reputations. PBS News, for instance, has covered the potential dangers behind AI. In the wrong hands, this is a weapon. Deepfakes are only getting better, making it harder and harder to tell what's real.

One of the darkest uses of AI technology is the creation of child exploitation content. Even when no real child is physically harmed, AI can be used to generate disturbing, realistic images or videos that depict minors in a sexualized way. This is sad, sickening, unethical, and deeply damaging to the well-being of society. Does the name Hugh Nelson sound familiar? It should. He was jailed in 2024 for making and selling AI-generated sexual abuse images of children under 13. He was the administrator of a pedophile chat room. In an interview, he said, "I'm sexually attracted to some kids." What is especially disturbing is that he used real photos of innocent children to generate those images. Darker still, he shared the content in chat rooms to trade for other images. According to the Manchester Evening News, he was paid about £5,000 over 18 months. AI is celebrated for its creative potential, but cases like this are a reminder that dangerous people exist and actively use it for wrongdoing. This is a global issue that must be taken seriously.

The Challenge of Detection

It's 2025! If Apple can ship a new iOS update every few months, governments and tech platforms can develop regulations just as quickly. One effective approach would be a global requirement: whenever someone watches an AI video, a visible watermark or pop-up should appear to let viewers know. Think of how an Amber Alert keeps us aware and alert. Simple, easy, and very effective. Pop-up notifications would promote awareness and safety in a fast-moving digital world.

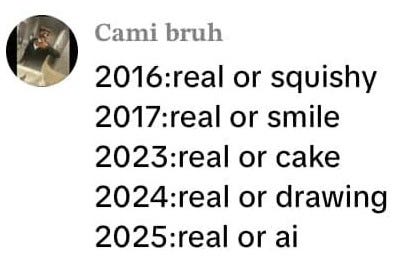

Yes, some AI content is obviously fake and purely entertaining, like the viral "Dad Joke" sitcom series. It's AI-made and funny. But not every video is that easy to identify. That's the real problem: detection tools are still playing duck, duck, goose without ever reaching the goose. As AI evolves, telling real from fake will only get harder. Without regulations or detection tools, society ends up with a serious imbalance of trust.

How to Spot an AI Video (While You Still Can)

Below, I've included examples of AI-generated videos. Spend a few moments watching them all, and make mental notes of anything that looks odd. Afterward, check the list below to see how accurate you were.

Did You Notice?

Unnatural facial movements (eye twitching, delayed lip sync and expressions).

Inconsistent shadows.

Blurry edges (the neck, hands, shoulders, or jaw look odd in motion).

The background is static, or people in the background move inconsistently.

Footage that is unnaturally crisp and clear, yet has a static, grainy texture.

AI characters often show the same expression for happy or shocked emotions (wide-open eyes).

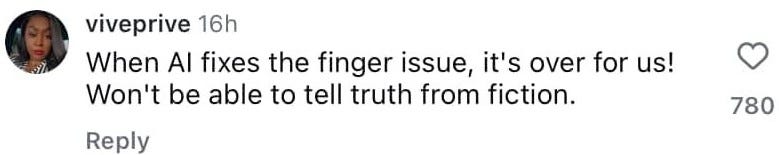

An extra body part (right now, usually an extra finger or an extra hand).

⚠️ Right now, these flaws show up if you look closely. Don't count on that being the case much longer. Always apply what you know now when engaging with AI-generated content. Question what you see, and confirm it with three reliable sources. AI is progressing fast.

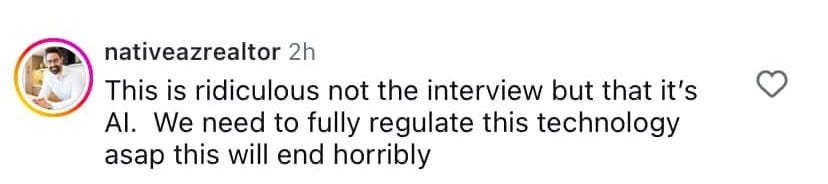

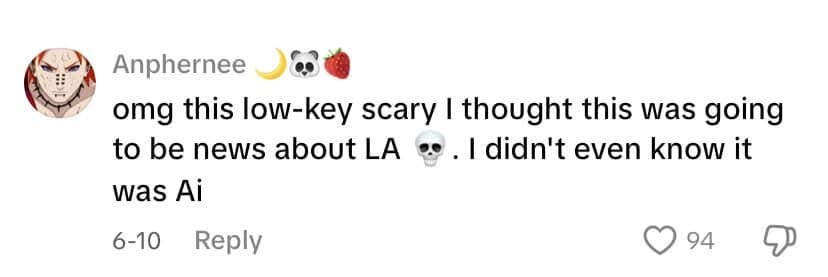

Public Commentary: We’re All Noticing

Just scroll through the comments. You’ll see things like:

People are catching on, but not fast enough for the government to take action. That's why this article, these side-by-side visuals, and this conversation need to happen now. I have been fooled twice by AI videos. Ever since, I've felt frightened and confused, and sometimes I can't tell what's real and what's fake.

The Case for Mandatory Disclosure

AI-generated videos and pictures should display a clear, unavoidable label or watermark. Without an obvious telltale sign, many viewers who aren't tech-savvy won't notice. The human brain can only process so much. A disclosure won't ruin entertainment or slow down future innovation. It's about being honest with consumers and keeping us informed, safe, and ready to think critically about what we're watching.

What That Will Look Like

Every deepfake or AI-generated video should automatically come with a watermark or a visible label. This label should be easy to read and impossible to ignore (no tiny text in the corner). If an AI video doesn't have a label, the person sharing it should be responsible for disclosing that it's AI before sharing.

To make this effective, there need to be consequences. For example, anyone using AI-generated content for a harmful purpose (deceptive ads, fake news, hate speech, blackmail, violence, fraud, replication) should face a base penalty of $500, which would triple with each repeated offense ($500, then $1,500, then $4,500, and so on). In addition, anyone who uses AI-generated content sexually in any form (creation, selling, exploitation, or abuse) should face jail time and a $50,000 fine, increasing with the severity of the offense.

This kind of regulation wouldn't be unprecedented. When TikTok first launched, influencers posted sponsored content without labeling it as an ad. Their audiences (fans, subscribers, viewers) were manipulated without their knowledge. Eventually, that changed; now influencers are required to disclose paid partnerships, and the Federal Trade Commission publishes guidance to help them do so and avoid legal consequences. Why? Because transparency gives people space to pause and think rather than being persuaded subconsciously. If that kind of regulation exists for advertising, why can't the same apply to AI-generated videos? With AI, the deception is far more dangerous.

Summary and Awareness

I understand; it's amazing to see how far the internet and technology have come. We now have robots that can handle basic human tasks. AI can help with schoolwork, generate logos, and design content. It's fun and convenient, and it saves time.

But with that convenience should come responsibility, and the next step forward should be global transparency. We can’t keep consuming content without knowing where it came from or who created it.

Right now, the water still feels shallow. In a few months, a few years, or a few decades, it will be deep. Very deep.

So stay aware. Pay attention to what you're seeing on your For You page, Reels, and YouTube feed.

Because not everything you’re watching… is real.